Check Node Utilization (CPU, Memory, Processes, etc.)

Checking the utilization of the compute nodes helps you use Kay efficiently and spot common mistakes in your Slurm submission scripts.

To check the utilization of a compute node, SSH to it from any login node and then run commands such as htop and nvidia-smi. This works only for compute nodes on which one of your Slurm jobs is currently running.

Before using the SSH command, generate a new SSH key pair on a Kay login node and add its public key to your authorized keys on Kay. This needs to be done only once.

# RUN ON THE LOGIN NODE (ONLY ONCE)

# Generate a new SSH key pair.

# Press Enter 3 times to accept the default value at each prompt.

# This saves the key pair at the default location and does not set a passphrase.

ssh-keygen -t ecdsa

# Append the public key to the list of authorized keys

cat ~/.ssh/id_ecdsa.pub >> ~/.ssh/authorized_keys
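The append in the step above adds a duplicate entry each time it is rerun, and OpenSSH typically refuses an authorized keys file that other users can write to. A minimal sketch of a rerun-safe version follows. The function name `install_pubkey` is hypothetical, and the permission modes assume the default OpenSSH `StrictModes` behaviour rather than anything specific to Kay.

```shell
# install_pubkey PUBKEY_FILE AUTH_FILE  (hypothetical helper, not part of Kay)
# Appends the public key to the authorized keys file only if it is not
# already listed, then restricts permissions on the file and its directory.
install_pubkey() {
    pubkey_file=$1
    auth_file=$2
    auth_dir=$(dirname "$auth_file")
    key=$(cat "$pubkey_file")

    mkdir -p "$auth_dir"
    touch "$auth_file"

    # -x: match the whole line, -F: treat the key as a fixed string
    if ! grep -qxF -- "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi

    chmod 700 "$auth_dir"
    chmod 600 "$auth_file"
}

# Usage on the login node:
#   install_pubkey ~/.ssh/id_ecdsa.pub ~/.ssh/authorized_keys
```

Running the helper twice leaves a single entry in the file, so it is safe to include in a setup script.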

Next, find the name of the compute node on which your Slurm job is running. In the example below, the job is running on n1.

# RUN ON THE LOGIN NODE

# Check Slurm job queue to find the allocated node name

[asharma@login2 ~]$ squeue -u $USER

JOBID   PARTITION  NAME  USER     ST  TIME  NODES  NODELIST(REASON)
476280  DevQ       bash  asharma  R   1:06  1      n1
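When several jobs are queued, picking the node out by eye gets error-prone. The sketch below filters `squeue` output down to the node lists of running jobs. It assumes the default column layout shown above (state in column 5, node list in the last column) and job names without spaces; `running_nodes` is a hypothetical helper name.

```shell
# running_nodes: read default `squeue` output on stdin and print the
# NODELIST entry of every job in the running (R) state.
# Assumes the default columns: ... ST TIME NODES NODELIST(REASON)
running_nodes() {
    # NR > 1 skips the header line; $NF is the last column
    awk 'NR > 1 && $5 == "R" { print $NF }'
}

# Usage on the login node:
#   squeue -u "$USER" | running_nodes
```

For a multi-node job the entry is a compact range such as `n[1-3]`; `scontrol show hostnames` can expand such a range into individual node names.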

Now SSH to the compute node.

# RUN ON THE LOGIN NODE

# SSH to compute node. Note that the hostname in the prompt changes.

[asharma@login2 ~]$ ssh n1

[asharma@n1 ~]$

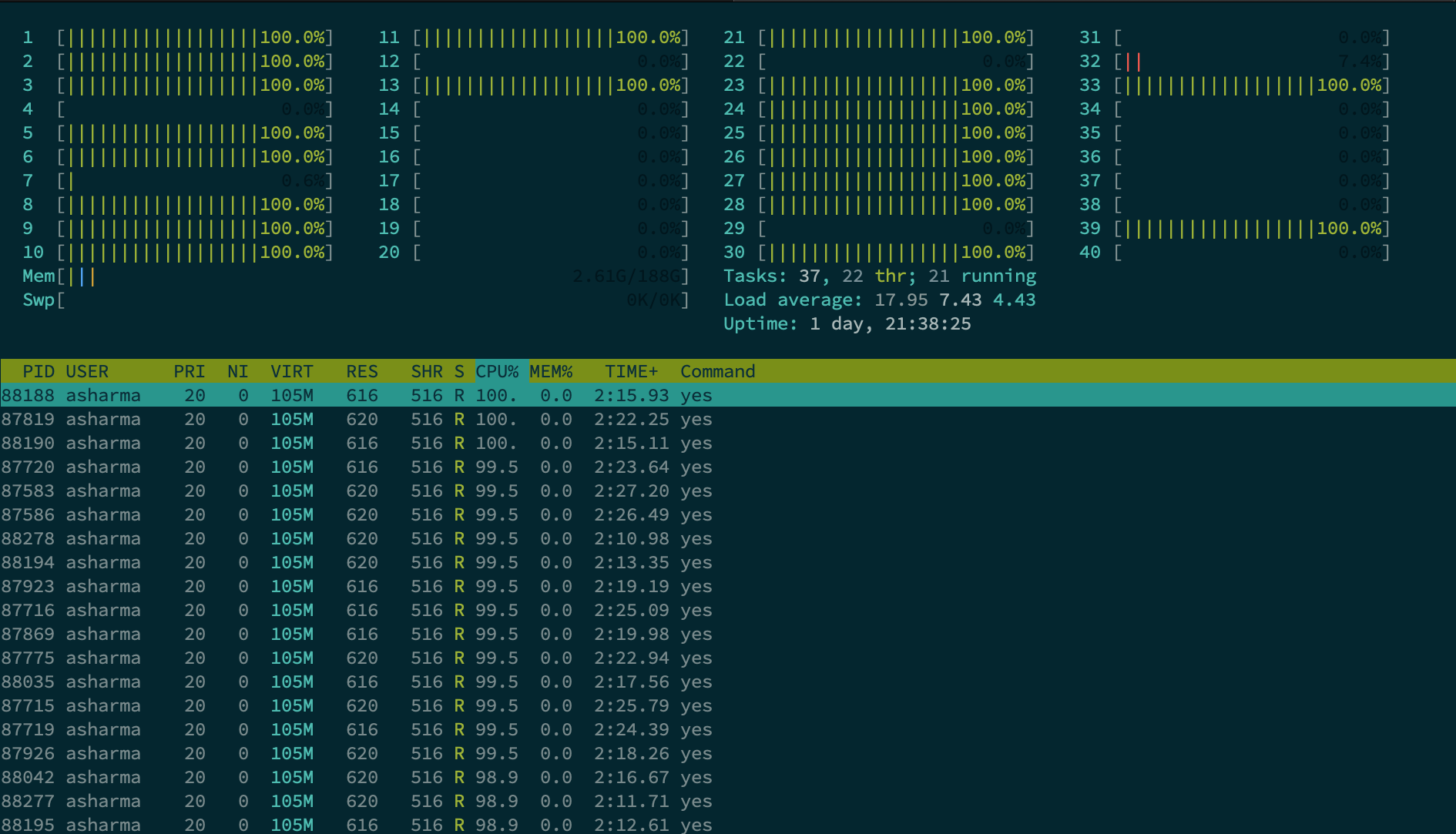
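If the login asks for a password or fails, the key setup is the usual suspect. The sketch below checks key-based login without opening an interactive session. `can_login` is a hypothetical helper name and the 5-second timeout is an arbitrary choice; `BatchMode` and `ConnectTimeout` are standard OpenSSH client options.

```shell
# can_login NODE  (hypothetical helper)
# Succeeds if key-based SSH to NODE works without any prompt.
# BatchMode=yes makes ssh fail instead of asking for a password, and
# ConnectTimeout bounds the wait if the node is unreachable.
can_login() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Usage on the login node (n1 is the node from the squeue example):
#   can_login n1 && echo "key-based login works" || echo "login failed"
```

A failure here can also mean that none of your jobs is currently running on that node, since access is limited to nodes allocated to you.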

To check the utilization of a standard node, run htop.

# RUN ON THE COMPUTE NODE (STANDARD NODES)

# Get utilization

[asharma@n1 ~]$ htop

It shows a graphical representation of CPU and memory utilization along with details of each process. For more information about htop, see the htop homepage.
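One common mistake that htop makes visible is a job that reserves a whole node but keeps only one or two cores busy. As a non-interactive complement, the sketch below expresses the 1-minute load average as a percentage of the core count. It assumes a Linux node where `/proc/loadavg` and `nproc` are available; `load_percent` is a hypothetical helper name, and load average is only a rough proxy for CPU utilization.

```shell
# load_percent LOAD CORES  (hypothetical helper)
# Print LOAD as a whole-number percentage of CORES (fraction truncated).
load_percent() {
    awk -v load="$1" -v cores="$2" 'BEGIN { printf "%d\n", (load / cores) * 100 }'
}

# Usage on the compute node:
#   load_percent "$(cut -d ' ' -f 1 /proc/loadavg)" "$(nproc)"
```

A value far below 100 on a node reserved exclusively for your job suggests that the job is not using all of the cores it was given.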

To check the utilization of a GPU node, run nvidia-smi.

# RUN ON THE COMPUTE NODE (GPU)

# Load the cuda Environment Module

[asharma@n361 ~]$ module load cuda/9.2

# Get utilization

[asharma@n361 ~]$ nvidia-smi

It shows a textual representation of GPU and GPU memory utilization, along with details of each process running on both GPUs of the node.
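For logging or scripted checks, `nvidia-smi` can also emit CSV through its `--query-gpu` and `--format` options. The sketch below flags GPUs whose utilization falls below a threshold, which is a quick way to spot a job that uses only one of the node's GPUs. `idle_gpus` is a hypothetical helper name and the 10% threshold in the usage line is an illustrative assumption.

```shell
# idle_gpus THRESHOLD  (hypothetical helper)
# Read "index, utilization" CSV lines on stdin and print the index of
# every GPU whose utilization (%) is below THRESHOLD.
idle_gpus() {
    # Fields are separated by a comma followed by optional spaces
    awk -F ', *' -v min="$1" 'NF >= 2 && $2 + 0 < min + 0 { print $1 }'
}

# Usage on the GPU node:
#   nvidia-smi --query-gpu=index,utilization.gpu --format=csv,noheader,nounits | idle_gpus 10
```

Utilization is an instantaneous sample, so a GPU that shows up once may only be between kernels; repeating the check a few times gives a more reliable picture.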